Week 7: Machine learning and creative computing

Pre-workshop tasks

Ahead of this week's workshop, we were tasked with trying out p5.js.

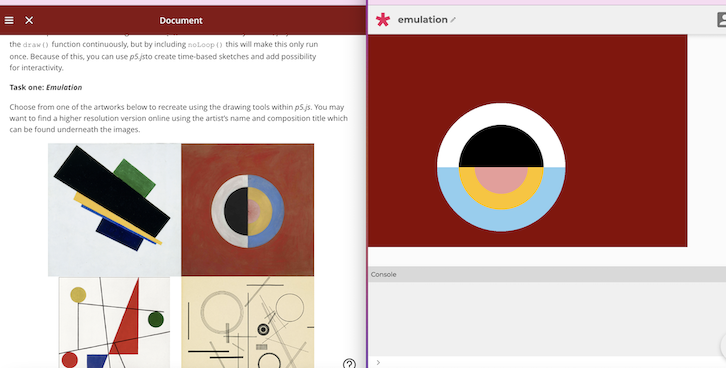

I used p5.js to try to emulate an existing design. I first worked through some pointers from the material on Minerva before experimenting with the emulation itself. See below what I intended to emulate versus the outcome: despite many attempts and a lot of googling, I struggled to get the rotation right and perfectly emulate the original. This got quite frustrating, but I kept reminding myself that creative computing is meant to be fun and playful!
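A common reason rotation misbehaves in p5.js is that `rotate()` turns the whole coordinate system about the current origin (the top-left corner by default), not about the shape's centre, so the shape swings away from where it was drawn. The usual fix is to `translate()` to the shape's centre first, then `rotate()`, then draw the shape centred on (0, 0). Because a p5.js sketch needs a browser, the sketch below shows the underlying maths in plain JavaScript; `rotateAbout` is a hypothetical helper, not part of p5.js, and angles are in radians (p5's default `angleMode`).

```javascript
// Hypothetical helper: rotate point (x, y) by `angle` radians about the pivot (cx, cy).
// The equivalent p5.js drawing pattern would be:
//   push();
//   translate(cx, cy);   // move the origin to the pivot
//   rotate(angle);       // rotate the coordinate system about the new origin
//   rectMode(CENTER);
//   rect(0, 0, w, h);    // draw the shape centred on the pivot
//   pop();
// With p5's y-axis pointing down, a positive angle appears clockwise on screen.
function rotateAbout(x, y, cx, cy, angle) {
  // Shift the point so the pivot sits at the origin.
  const dx = x - cx;
  const dy = y - cy;
  const cos = Math.cos(angle);
  const sin = Math.sin(angle);
  // Apply the standard 2D rotation, then shift back.
  return [cx + dx * cos - dy * sin, cy + dx * sin + dy * cos];
}

// Corner of a 100 x 50 rectangle centred at (200, 200), rotated a quarter turn.
const aboutCentre = rotateAbout(250, 225, 200, 200, Math.PI / 2); // ≈ [175, 250]
const aboutOrigin = rotateAbout(250, 225, 0, 0, Math.PI / 2);     // ≈ [-225, 250], off the canvas
console.log(aboutCentre, aboutOrigin);
```

Rotating about the centre keeps the corner near the shape, while rotating about the origin throws it off the left edge of the canvas, which is the kind of drift that makes an emulation look "almost right".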

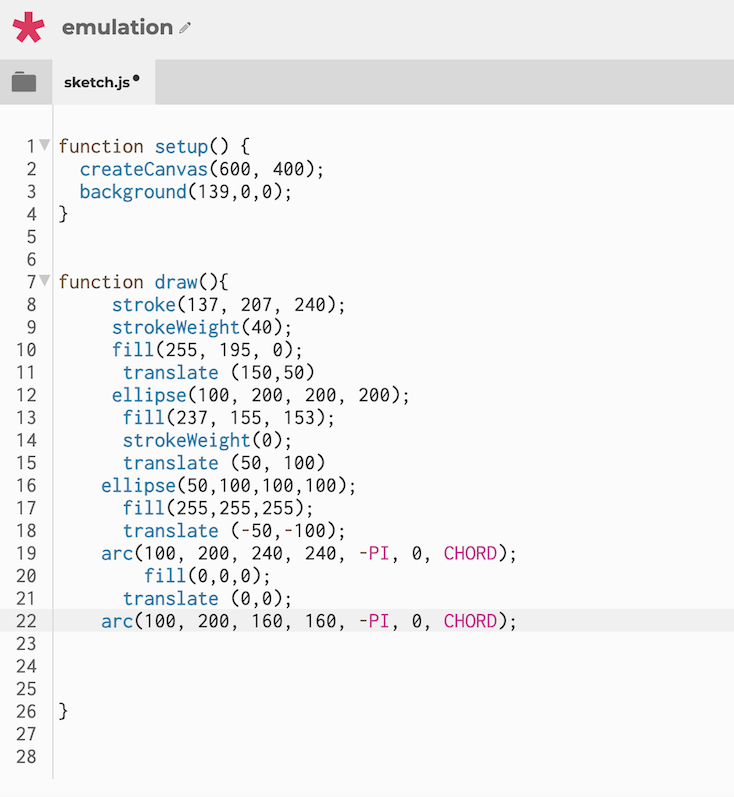

See below for my code and the outcome:

Machine learning

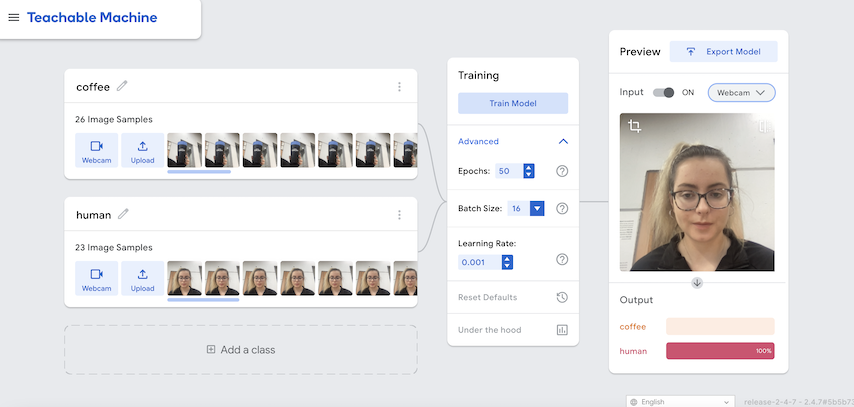

We used Teachable Machine: quite literally, a machine that you can teach to carry out certain tasks. There were three ways to train it: with images, audio or poses. I first trained an image model to distinguish between what a coffee cup looks like and what a human looks like. Its output was fairly representative: see below.
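An image classifier like this does not output a single answer; it gives a confidence score for every class it was trained on, and a sketch typically displays the most confident one. The plain-JavaScript sketch below assumes each prediction is an object with `label` and `confidence` fields (the shape ml5.js uses for classifier results; other libraries name the fields differently). `topPrediction` and its `minConfidence` threshold are hypothetical, and the confidences in the example are made-up values, not real model output.

```javascript
// Hypothetical helper: pick the most confident class from a list of predictions.
// Assumes each prediction looks like { label: string, confidence: number in [0, 1] }.
// Returns "unsure" when even the best guess falls below minConfidence.
function topPrediction(predictions, minConfidence = 0.6) {
  if (predictions.length === 0) return "unsure";
  let best = predictions[0];
  for (const p of predictions) {
    if (p.confidence > best.confidence) best = p;
  }
  return best.confidence >= minConfidence ? best.label : "unsure";
}

// Made-up confidences for the two classes described above.
console.log(topPrediction([
  { label: "Human", confidence: 0.08 },
  { label: "Coffee cup", confidence: 0.92 },
])); // prints "Coffee cup"
```

The threshold is a design choice: without it, the sketch would confidently show "Human" or "Coffee cup" even for a webcam frame containing neither.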

When working on this, I did think that machine learning systems can have certain flaws. For example, in this case the machine is taught that 'humans' are people who look like me, and it therefore associates 'human' with a certain gender, race and so on. It is fairly easy to create problematic machines. I then considered the broader picture: those training machine learning models on a larger scale might subconsciously teach them that a human can be identified by a certain gender, race and so on, which can reinforce harmful stereotypes in wider society.

The audio option was interesting; however, with so many other people in the workshop also trying out their own audio clips, I had to add several classes so the machine could tell the sounds apart. One sound I wanted it to recognise was the tapping of my nails, but the machine often confused it with someone clicking their fingers nearby, so I had to add finger clicking as a separate class. Overall the output was fairly reliable, but the machine still got somewhat confused.
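The reason an extra class helps is that a classifier can only answer with the classes it knows: a sound it was never taught is forced into whichever known class is closest. The toy nearest-centroid classifier below illustrates this in plain JavaScript. It is NOT how Teachable Machine works internally, and the two-number "feature vectors" and class centres are invented purely for illustration.

```javascript
// Toy nearest-centroid classifier: returns the label whose centre is closest to the sample.
// Illustration only; the features and centres below are invented.
function classify(sample, centroids) {
  let bestLabel = null;
  let bestDist = Infinity;
  for (const [label, centre] of Object.entries(centroids)) {
    const dist = Math.hypot(sample[0] - centre[0], sample[1] - centre[1]);
    if (dist < bestDist) {
      bestDist = dist;
      bestLabel = label;
    }
  }
  return bestLabel;
}

const fingerClick = [0.8, 0.7]; // hypothetical features of a nearby finger click

// With only two classes, the click is forced into the closest one.
const twoClasses = { "Background noise": [0.1, 0.1], "Nail tapping": [0.7, 0.4] };
console.log(classify(fingerClick, twoClasses)); // prints "Nail tapping"

// Adding a dedicated class gives the click somewhere better to go.
const threeClasses = { ...twoClasses, "Finger clicking": [0.9, 0.8] };
console.log(classify(fingerClick, threeClasses)); // prints "Finger clicking"
```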

We then explored integrating a Teachable Machine model into p5.js. I managed to get my own code working correctly, but to deepen my understanding, I also looked at an example that tracked movement into and out of the webcam's view. For each movement, a different output was displayed on the screen. Similarly, I tried creating a visual loudspeaker. I remember working on this during my undergraduate Digital Media degree here, so it was a great refresher.
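A visual loudspeaker usually comes down to one mapping: take the current microphone level and rescale it to a shape's size every frame. In p5.js the level would typically come from `getLevel()` on a `p5.AudioIn` or `p5.Amplitude` object, and the rescaling from `map()`. The plain-JavaScript sketch below reimplements that clamped rescaling as a hypothetical `mapRange` helper so it runs without a browser; the 0 to 0.3 input range and 20 to 300 pixel output range are arbitrary choices.

```javascript
// Hypothetical helper mirroring p5.js's map(value, start1, stop1, start2, stop2, true):
// rescales `value` from one range to another and clamps the result to the output range.
function mapRange(value, inMin, inMax, outMin, outMax) {
  const t = (value - inMin) / (inMax - inMin);
  const clamped = Math.min(Math.max(t, 0), 1);
  return outMin + clamped * (outMax - outMin);
}

// In a p5.js draw() loop this might look like:
//   circle(width / 2, height / 2, mapRange(mic.getLevel(), 0, 0.3, 20, 300));
// Here a few sample levels stand in for live microphone input.
for (const level of [0, 0.15, 0.3, 0.5]) {
  console.log(level, mapRange(level, 0, 0.3, 20, 300));
}
```

Clamping keeps a sudden loud noise from drawing a circle far larger than the canvas.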